SPIRIT partners designed a set of use cases to validate and test the features of the SPIRIT platform.

Use case #1: Multi-Source Live Teleportation with 5G MEC Support

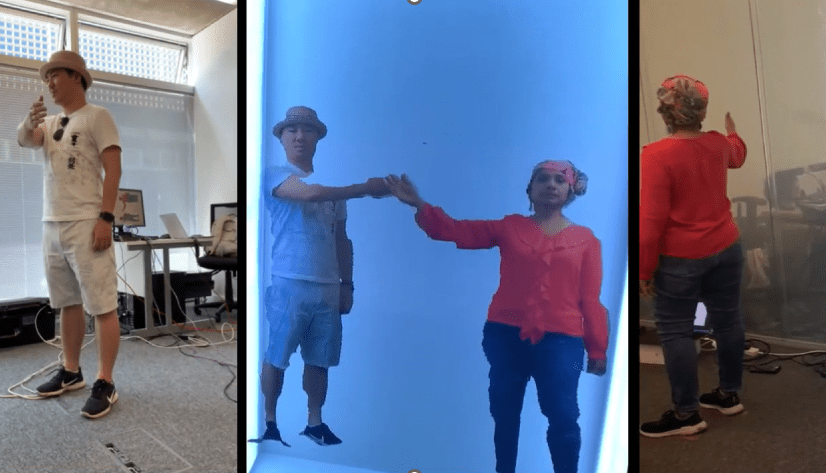

Use Case #1, led by the University of Surrey as part of the SPIRIT project, involves live teleportation of people from different Internet locations into a shared virtual audience space so that the audience has the immersive perception that everyone is in the same physical scene. One application scenario is distributed virtual performances, where actors can physically perform (e.g. dance) in different locations, but their live holograms can be simultaneously teleported to a “virtual stage” where the audience can enjoy the entire performance event, which consists of virtual holograms of real performers from different remote locations.

In addition to the traditional network requirements for teleportation applications, such as high bandwidth, data synchronisation is critical in multi-source teleportation to ensure user quality of experience (QoE). To avoid perceived motion misalignment between source objects, teleportation content frames that originate from different source objects and share the same frame creation time must arrive at the receiver side within a strict time window. Human tolerance for such motion misalignment is expected to vary depending on the application scenario. For example, in casual chatting with minimal body movement, frame misalignment may not be a significant issue. In scenarios with more body movement, however, motion misalignment is more likely to be noticed by the viewer. Several factors in the operational environment can contribute to motion misalignment, such as network distance and latency, path load conditions, frame production conditions, and the end-to-end transport layer mechanisms used.

Implementation

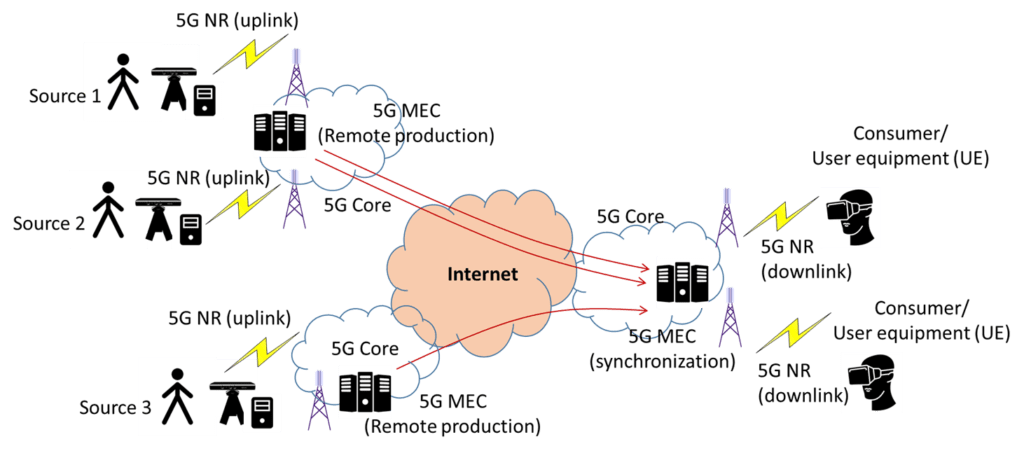

Figure 1 shows the overall illustrative framework for end-to-end network support of multi-source teleportation applications, based on the open-source LiveScan3D platform [1]. For each source (e.g. a person to be captured and teleported), multiple sensor cameras (e.g. Azure Kinect DK cameras) capture the object from different directions. Each camera is connected to a local PC, called a client, which is responsible for local processing of the raw content data. The pre-processed local data is then streamed to the production server, which integrates the frames produced by different clients for the same captured object. The functionality of the production server can optionally be supported by 5G multi-access edge computing (MEC). As shown in Figure 2, a common 5G MEC can provide remote production services to all regional clients. In this case, local frames from individual clients are streamed in real time to the local 5G MEC via the 5G New Radio (NR) uplink.

On the receiver (content consumer) side, the 5G MEC can be used to synchronise, in real time, the incoming frames originating from multiple sources at different locations. The purpose is to eliminate consumer-perceivable motion misalignment caused by uncertainty in frame arrival times. Such uncertainty can stem from a wide range of factors, such as the path distances and conditions from the different sources to the consumer side, as well as the workload of remote clients. The frame synchronisation operation is typically based on a simple frame pairing approximation algorithm that uses the timestamp embedded in each incoming frame. Finally, the frames synchronised by the local MEC can be streamed in real time to local consumer devices, such as Microsoft HoloLens devices, over the 5G New Radio (NR) downlink (tethering required).
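The timestamp-based pairing described above can be sketched as follows. This is a minimal illustration and not the SPIRIT implementation: the `Frame` class, the `pair_frames` function, and the `tolerance_ms` parameter are hypothetical names, and the sketch assumes two sources whose clocks are already aligned and whose frames arrive sorted by timestamp.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Frame:
    source_id: str       # hypothetical identifier of the capturing site
    timestamp_ms: int    # creation time embedded in the frame
    payload: bytes = b""


def pair_frames(a: List[Frame], b: List[Frame],
                tolerance_ms: int) -> List[Tuple[Frame, Frame]]:
    """Greedily pair frames from two sources by creation timestamp.

    Both lists must be sorted by timestamp_ms. Two frames are paired when
    their timestamps differ by at most tolerance_ms; frames without a close
    enough partner are skipped (dropped).
    """
    pairs = []
    i = j = 0
    while i < len(a) and j < len(b):
        delta = a[i].timestamp_ms - b[j].timestamp_ms
        if abs(delta) <= tolerance_ms:
            pairs.append((a[i], b[j]))
            i += 1
            j += 1
        elif delta < 0:
            i += 1  # a[i] is too old to match any remaining frame of b
        else:
            j += 1  # b[j] is too old to match any remaining frame of a
    return pairs


# Example: two sources producing frames at slightly different instants.
site_a = [Frame("A", t) for t in (0, 33, 66, 100)]
site_b = [Frame("B", t) for t in (5, 40, 120)]
paired = pair_frames(site_a, site_b, tolerance_ms=10)
```

A tighter `tolerance_ms` reduces visible misalignment but drops more frames, so the value would likely be tuned per application scenario (for example, looser for casual chatting than for dance).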

Use case #2: Real-Time Animation and Streaming of Realistic Avatars

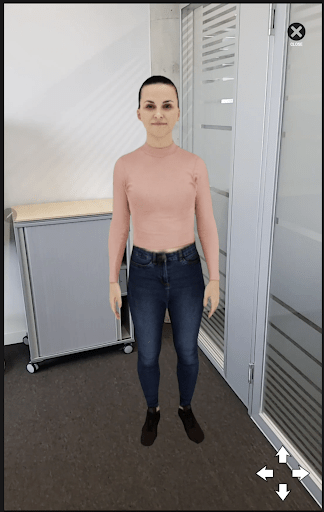

Advances in real-time communications offer valuable opportunities to develop applications that represent users far more immersively than was previously possible. To this end, realistic volumetric representation of people has become an important research topic in recent years because, in combination with Mixed Reality (MR) devices, it can deliver a more immersive overall experience.

However, such applications present significant technical challenges that must be overcome to ensure smooth and stable communication. The first issues to consider are the considerable amount of data that a photorealistic avatar comprises and the variability of network conditions. In addition, the animation of the three-dimensional object must be done in real-time, taking any sort of media (audio, video, text, 6DoF position) as input. This adds an extra level of flexibility to the volumetric display.

Use Case #2, led by Fraunhofer HHI in the framework of the SPIRIT project, proposes a scenario in which the avatar is animated by an animation library that uses a neural network. The input to this network is media captured on a mobile device. The rendering of the object is split between a cloud server and the final device to reduce the amount of data transmitted. Congestion control algorithms keep network latency low. The final device integrates the avatar into the real world and allows the user to interact with it. Video [1] shows an example of the application.

Implementation

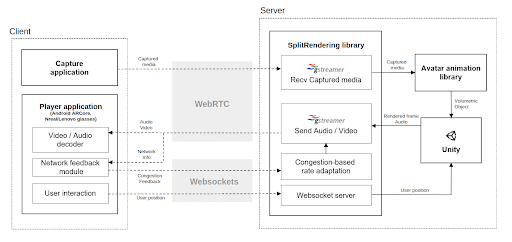

Cloud-based streaming for avatar animation shifts the computationally intensive rendering from the client to the edge server while giving users reliable interaction through low-latency streaming. The SplitRendering library [2] on the server handles the transport of media and user metadata over the network via WebSockets and WebRTC. It receives the captured media (e.g. recorded audio) from the client and feeds it into the avatar animation library, a media-driven component that generates real-time interactive animation that is not pre-captured. The rendering engine (Unity) renders the interactive avatar and generates a 2D image of the 3D object from the appropriate angle, according to the current position of the end user. This 2D image is then compressed by a hardware encoder (e.g. NVENC) and transmitted to the client.

The Player application on the client renders the 2D video, synchronised with the user's viewport, on AR/VR mobile devices by leveraging existing 2D video codecs such as H.264 and H.265. The solid-colour background of the rendered image is removed at this stage, integrating the avatar into the room as if it were really there. At the same time, the application sends information about the position and rotation of the avatar to the server in order to synchronise the rendering view. This gives the impression that a 3D object is being rendered on the client although only 2D images are received, which reduces the required bandwidth significantly. In this application, the user can also interact with the avatar by scaling it and adjusting its position. The avatar addresses the user by adjusting its head rotation so that there is natural eye contact in the scene.
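The background removal step can be illustrated with a simple colour-key sketch. It assumes the background is a single known colour and that a per-channel tolerance is enough to absorb compression noise; the function name `remove_background`, the `key` colour, and the `tolerance` value are hypothetical and not taken from the Player application.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def remove_background(pixels: List[RGB], key: RGB,
                      tolerance: int = 30) -> List[RGBA]:
    """Return RGBA pixels where pixels close to the key colour are transparent.

    A pixel counts as background when every channel is within `tolerance`
    of the corresponding channel of `key`.
    """
    out = []
    for r, g, b in pixels:
        is_background = (
            abs(r - key[0]) <= tolerance
            and abs(g - key[1]) <= tolerance
            and abs(b - key[2]) <= tolerance
        )
        # Alpha 0 hides the pixel so the real room shows through.
        out.append((r, g, b, 0 if is_background else 255))
    return out


# Example: one pure-green background pixel and one avatar pixel.
decoded = [(0, 255, 0), (200, 50, 50)]
keyed = remove_background(decoded, key=(0, 255, 0))
```

On a real device this would typically run per frame on the GPU (for example in a shader) rather than in a Python loop; the sketch only shows the decision rule.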

The network is assumed to be a mobile network in which the bottleneck is the access link between the mobile devices and the base station, with ECN marking used to signal congestion severity to the client. The server adjusts the encoding bitrate using a rate adaptation algorithm (e.g. one based on L4S) driven by the congestion feedback sent by the client.
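As a rough illustration of this feedback loop, the sketch below lowers the encoder bitrate in proportion to the fraction of ECN-marked packets reported by the client and raises it gently when no marks are seen. It is not the algorithm used in SPIRIT: the function name `next_bitrate_kbps` and all parameter values (`beta`, `increase_kbps`, the bitrate bounds) are assumptions chosen for the example.

```python
def next_bitrate_kbps(current_kbps: float, ce_marked: int, total_packets: int,
                      *, min_kbps: float = 500.0, max_kbps: float = 20000.0,
                      increase_kbps: float = 100.0, beta: float = 0.5) -> float:
    """Compute the next encoder bitrate from one client feedback report.

    ce_marked / total_packets is the fraction of packets that carried an ECN
    congestion mark. The bitrate is reduced proportionally to that fraction
    (scaled by beta) and increased additively when no packet was marked.
    """
    if total_packets <= 0:
        return current_kbps  # no feedback in this interval, keep the rate
    fraction = ce_marked / total_packets
    if fraction > 0:
        target = current_kbps * (1 - beta * fraction)
    else:
        target = current_kbps + increase_kbps
    # Clamp to the range the encoder is assumed to support.
    return max(min_kbps, min(max_kbps, target))


# Example: half of the packets in the last report were marked.
rate = next_bitrate_kbps(10000.0, ce_marked=50, total_packets=100)
```

Reacting in proportion to the marking fraction, rather than halving the rate on any mark, is the general idea behind scalable congestion control; the constants here are placeholders that would need tuning against real traffic.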

Use and improve the SPIRIT platform by participating in the Open Calls